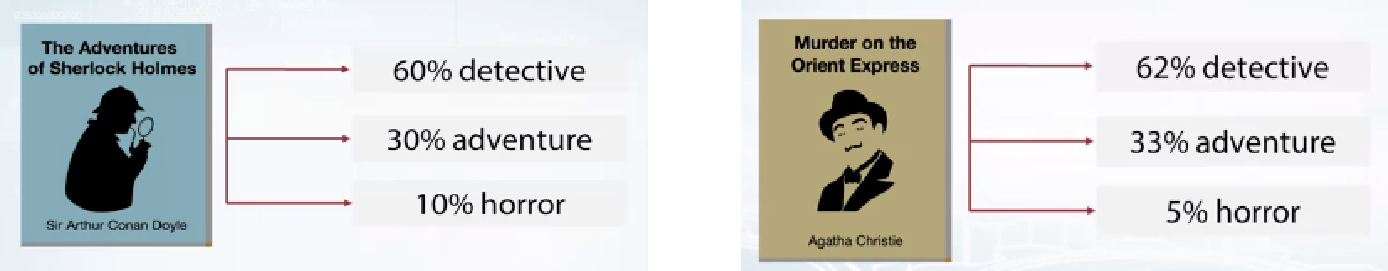

LDA算法常被用于将文档归类到几个不同的主题下(如下图所示),它是一种无监督算法。需要注意的是下图只是为了阐述概念,实际上LDA算法仅需要预先给定主题的个数,并不会给定每个主题对应的名称,每个主题对应的内容同样是在训练过程中得到的。

模型建立

- 假设共有I个文档和K个主题,定义矩阵\Phi为I行K列的矩阵,则矩阵的第i行为第i个文档来自这些主题的概率向量\vec{\phi_i},该向量的先验分布满足Dirichlet分布, 即Dir(\alpha_1,\alpha_2,\cdots,\alpha_K),Dirichlet分布的形式为p(\vec{\phi_i})=Dir(\vec{\phi_i} \mid \alpha_1,\alpha_2,\cdots,\alpha_K)=\frac{1}{B(\alpha_1,\alpha_2,\cdots,\alpha_K)}\prod_{k=1}^{K}{\Phi_{ik}}^{\small{\alpha}_{k}-1},\space\space其中\sum_{k=1}^K\Phi_{ik}=1

- 假设第i个文档中的单词数为N_i,定义第i个文档中第n个单词所属的主题为Z_{in}\space ,\space\space i=1,2,\cdots I,\space n=1,2,\cdots N_i

- 每个主题都是一个基于词汇表里所有词汇的概率分布。假设词汇表中单词的数量为M,定义矩阵\Theta为K行M列的矩阵,则在主题k下单词m出现的概率为\Theta_{km},并且满足\sum_{m=1}^M \Theta_{km}=1

- 定义第i个文档中的第n个单词在词汇表中的位置为W_{in}\space ,\space\space i=1,2,\cdots I,\space n=1,2,\cdots N_i\space。LDA求解的问题可归纳为已知文档数据集W,计算主题的词汇概率分布\Theta的最优解。可以使用最大似然估计求解,即\underset{\Theta}{\arg\max}\space p(W \mid \Theta),为方便求解,在计算过程中引入了两个潜变量(Latent Variable):第1、2步所述的\Phi和Z

- 引入潜变量之后的概率公式可写为\tag{1}\begin{aligned}p(W,\Phi,Z \mid \Theta) &=\prod_{i=1}^I p(\vec{\phi_i})\prod_{n=1}^{N_i}p(Z_{in}\mid\vec{\phi_i})p(W_{in}\mid Z_{in}) \\ &=\prod_{i=1}^I Dir(\vec{\phi_i} \mid \alpha_1,\alpha_2,\cdots,\alpha_K)\prod_{n=1}^{N_i}\Phi_{i,Z_{in}}\Theta_{Z_{in},W_{in}}\end{aligned}

使用EM算法求解

使用文章EM算法和变分推断介绍中提到的EM算法求解LDA,下面介绍求解过程中第t步迭代的E step和M step:

E step:求解关于潜变量\Phi和Z的分布\hat{q}(\Phi,Z),容易看出\hat{q}(\Phi ,Z) = p(\Phi ,Z\mid W,\Theta^{(t)}),在这里使用文章EM算法和变分推断介绍中提到的变分推断方法用q_1^*(\Phi)q_2^*(Z)近似求解\hat{q}(\Phi ,Z)。由公式(1)可得\tag{2}\ln p(W,\Phi,Z \mid \Theta^{(t)})=\sum_{i=1}^{I}\left[\sum_{k=1}^K (\alpha_k-1)\ln\Phi_{ik}+\sum_{n=1}^{N_i}(\ln \Phi_{i,Z_{in}}+\ln \Theta_{Z_{in},W_{in}}^{(t)})\right]+const

- 固定q_2^*(Z),计算q_1^*(\Phi),则有\tag{3} h_1(\Phi)=\mathbb E_{Z\sim \small q_2^*(Z)}[\ln p(W,\Phi,Z \mid \Theta^{(t)})]由于\mathbb E_{Z\sim \small q_2^*(Z)}\ln \Phi_{i,Z_{in}}=\sum_{k=1}^K p(Z_{in}=k)\ln \Phi_{ik},记\Gamma_{in}(k)=p(Z_{in}=k),上述公式(3)可写为:\tag{4} h_1(\Phi)=\sum_{i=1}^{I}\left[\sum_{k=1}^K \left(\alpha_k+\sum_{n=1}^{N_i}\Gamma_{in}(k)-1\right)\ln\Phi_{ik}\right]+const容易计算出\tag{5}q_1^*(\Phi)=\frac{e^{h_1(\Phi)}}{const}=\prod_{i=1}^{I}q_1^*(\vec{\phi_i})=\prod_{i=1}^{I}Dir\left(\vec{\phi_i} \mid \alpha_1+\sum_{n=1}^{N_i}\Gamma_{in}(1),\cdots,\alpha_K+\sum_{n=1}^{N_i}\Gamma_{in}(K)\right)

- 固定q_1^*(\Phi),计算q_2^*(Z),则有\tag{6} h_2(Z)=\mathbb E_{\Phi\sim \small q_1^*(\Phi)}[\ln p(W,\Phi,Z \mid \Theta^{(t)})]=\sum_{i=1}^I \sum_{n=1}^{N_i} \left[\ln \Theta_{Z_{in},W_{in}}^{(t)}+\mathbb{E}_{\vec{\phi_i}\sim \small q_1^*(\vec{\phi_i})}\left[\ln \Phi_{i,Z_{in}}\right]\right]容易看出\tag{7} q_2^*(Z)=\frac{e^{h_2(Z)}}{const}=\prod_{i=1}^{I}\prod_{n=1}^{N_i}q_2^*(Z_{in})其中q_2^*(Z_{in})为变量Z_{in}的概率分布,满足\tag{8} \Gamma_{in}(k)=p(Z_{in}=k)=q_2^*(k)=\frac{\Theta_{k,W_{in}}^{(t)}\exp\left(\mathbb{E}_{\vec{\phi_i}\sim \small q_1^*(\vec{\phi_i})}\left[\ln \Phi_{ik}\right]\right)}{\sum_{j=1}^K \Theta_{j,W_{in}}^{(t)}\exp\left(\mathbb{E}_{\vec{\phi_i}\sim \small q_1^*(\vec{\phi_i})}\left[\ln \Phi_{ij}\right]\right)}期望\mathbb{E}_{\vec{\phi_i}\sim \small q_1^*(\vec{\phi_i})}\left[\ln \Phi_{ik}\right]的计算可参见附录部分

- 首先对\underset{\underset{\underset{k=1,2,\cdots,K}{n=1,2,\cdots,N_i}}{i=1,2,\cdots,I}}{\Gamma_{in}(k)}进行初始化,然后根据公式(5)、(7)、(8)不断迭代计算q_1^*(\Phi)和q_2^*(Z)直至收敛,最终得到\hat{q}(\Phi ,Z)=q_1^*(\Phi)q_2^*(Z)

M step:因为有限制\sum_{m=1}^M \Theta_{km}=1,采用拉格朗日乘数法求解下述公式的最大值:\tag{9}\begin{aligned}\Theta^{(t+1)} &=\underset{\Theta}{\arg\max}\space\mathbb{E}_{\hat q}[\ln p(W,\Phi,Z \mid \Theta)]=\underset{\Theta}{\arg\max}\space\sum_{i=1}^I \sum_{n=1}^{N_i} \sum_{k=1}^K \Gamma_{in}(k)\ln\Theta_{k,W_{in}} \\ &= \underset{\Theta}{\arg\max}\left[\underbrace{\sum_{i=1}^I \sum_{n=1}^{N_i} \sum_{k=1}^K \Gamma_{in}(k)\ln\Theta_{k,W_{in}}+\sum_{k=1}^K \lambda_k (\sum_{m=1}^M \Theta_{km}-1)}_{\large L}\right]\end{aligned}对上式求导可得\frac{\partial L}{\partial\Theta_{km}}=\lambda_k+\sum_{i=1}^I\sum_{n=1}^{N_i}\frac{\Gamma_{in}(k)}{\Theta_{km}}\mathbb{I}(W_{in}=m)=0其中\mathbb{I}(W_{in}=m)当且仅当W_{in}=m时为1,其余情况均为0。结合公式\sum_{m=1}^M \Theta_{km}=1容易看出\tag{10} \Theta_{km}^{(t+1)}=\frac{\sum_{i=1}^I\sum_{n=1}^{N_i}\Gamma_{in}(k)\mathbb{I}(W_{in}=m)}{\sum_{j=1}^M\sum_{i=1}^I\sum_{n=1}^{N_i}\Gamma_{in}(k)\mathbb{I}(W_{in}=j)},\space\space k=1,2,\cdots,K,\space\space m=1,2,\cdots,M

不断对上述E step和M step迭代直至收敛,最终得到K个主题的词汇概率矩阵\Theta的最优值,以及在该最优值下计算的每个文档i的主题概率分布q_1^*(\vec{\phi_i})和文档i中每个单词n所属主题的概率分布q_2^*(Z_{in})

模型扩展

可以在上述模型中引入每个主题下所有词汇的先验概率分布,记\vec{\theta_k}为矩阵\Theta的第k行,即第k个主题下词汇的概率分布,它的先验分布可写为如下形式:p(\vec{\theta_k})=Dir(\vec{\theta_k}\mid \beta_1,\beta_2\cdots\beta_M)=\frac{1}{B(\beta_1,\beta_2,\cdots,\beta_M)}\prod_{m=1}^{M}{\Theta_{km}}^{{\beta}_{m}-1}则问题变为求解后验概率分布p(\Phi,\Theta,Z\mid W),此时有概率公式\small p(W,\Phi,\Theta,Z)=p(\Theta)p(W,\Phi,Z \mid \Theta)=\prod_{k=1}^K Dir(\vec{\theta_k}\mid \beta_1,\beta_2\cdots\beta_M)\prod_{i=1}^I Dir(\vec{\phi_i} \mid \alpha_1,\alpha_2,\cdots,\alpha_K)\prod_{n=1}^{N_i}\Phi_{i,Z_{in}}\Theta_{Z_{in},W_{in}}它的对数形式为\small \ln p(W,\Phi,\Theta,Z)=\sum_{k=1}^K \sum_{m=1}^M (\beta_m-1)\ln\Theta_{km}+\sum_{i=1}^{I}\left[\sum_{k=1}^K (\alpha_k-1)\ln\Phi_{ik}+\sum_{n=1}^{N_i}(\ln \Phi_{i,Z_{in}}+\ln \Theta_{Z_{in},W_{in}})\right]+const上述问题可直接使用变分推断求解,即求解p(\Phi,\Theta,Z\mid W)的最优近似分布q_1^*(\Phi)q_2^*(\Theta)q_3^*(Z):

- 固定q_2^*(\Theta)q_3^*(Z),计算q_1^*(\Phi),则有 h_1(\Phi)=\mathbb E_{\Theta,Z\sim \small q_2^*(\Theta)q_3^*(Z)}[\ln p(W,\Phi,\Theta,Z)]容易看出q_1^*(\Phi)的表示形式与公式(5)一致

- 固定q_1^*(\Phi)q_3^*(Z),计算q_2^*(\Theta),则有\begin{aligned}h_2(\Theta) &=\mathbb E_{\Phi,Z\sim \small q_1^*(\Phi)q_3^*(Z)}[\ln p(W,\Phi,\Theta,Z)] \\ &=\sum_{k=1}^K\sum_{m=1}^M \left[(\beta_m-1)\ln\Theta_{km}+\sum_{i=1}^{I}\sum_{n=1}^{N_i}\Gamma_{in}(k)\mathbb{I}(W_{in}=m)\ln \Theta_{km}\right]\end{aligned}容易看出\begin{aligned}q_2^*(\Theta) &=\frac{e^{h_2(\Theta)}}{const}=\prod_{k=1}^K q_2^*(\vec{\theta_k}) \\ &=\prod_{k=1}^K Dir\left(\vec{\theta_k}\mid \beta_1+\sum_{i=1}^{I}\sum_{n=1}^{N_i}\Gamma_{in}(k)\mathbb{I}(W_{in}=1),\cdots,\beta_M+\sum_{i=1}^{I}\sum_{n=1}^{N_i}\Gamma_{in}(k)\mathbb{I}(W_{in}=M)\right)\end{aligned}

- 固定q_1^*(\Phi)q_2^*(\Theta),计算q_3^*(Z),则有\begin{aligned}h_3(Z) &=\mathbb E_{\Phi,\Theta\sim \small q_1^*(\Phi)q_2^*(\Theta)}[\ln p(W,\Phi,\Theta,Z)] \\ &= \sum_{i=1}^{I}\sum_{n=1}^{N_i}\left[\mathbb{E}_{\vec{\phi_i}\sim \small q_1^*(\vec{\phi_i})}\left[\ln \Phi_{i,Z_{in}}\right]+\mathbb{E}_{\small \vec{\theta}_{Z_{in}}\sim q_2^*(\vec{\theta}_{Z_{in}})}\left[\ln \Theta_{Z_{in},W_{in}}\right]\right]\end{aligned}容易看出q_3^*(Z)=\frac{e^{h_3(Z)}}{const}=\prod_{i=1}^I\prod_{n=1}^{N_i}q_3^*(Z_{in})其中q_3^*(Z_{in})为变量Z_{in}的概率分布,满足\Gamma_{in}(k)=p(Z_{in}=k)=q_3^*(k)\propto \exp\left(\mathbb{E}_{\vec{\phi_i}\sim \small q_1^*(\vec{\phi_i})}\left[\ln \Phi_{ik}\right]+\mathbb{E}_{\small \vec{\theta}_{k}\sim q_2^*(\vec{\theta}_{k})}\left[\ln \Theta_{k,W_{in}}\right]\right)期望的计算过程可参见附录部分

- 首先对\underset{\underset{\underset{k=1,2,\cdots,K}{n=1,2,\cdots,N_i}}{i=1,2,\cdots,I}}{\Gamma_{in}(k)}进行初始化,然后不断迭代计算q_1^*(\Phi)、q_2^*(\Theta)和q_3^*(Z)直至收敛

附录

由Dirichlet分布的性质可知\begin{aligned}\small \mathbb{E}_{\vec{\phi_i}\sim \small q_1^*(\vec{\phi_i})}\left[\ln \Phi_{ik}\right] &=\Psi\left(\alpha_k+\sum_{n=1}^{N_i}\Gamma_{in}(k)\right)-\Psi\left(\sum_{j=1}^K \left[\alpha_j+\sum_{n=1}^{N_i}\Gamma_{in}(j)\right]\right) \\ &=\Psi\left(\alpha_k+\sum_{n=1}^{N_i}\Gamma_{in}(k)\right)-\Psi\left(N_i+\sum_{j=1}^K \alpha_j\right)=\Psi\left(\alpha_k+\sum_{n=1}^{N_i}\Gamma_{in}(k)\right)-const\end{aligned}以及\small \mathbb{E}_{\small \vec{\theta}_{k}\sim q_2^*(\vec{\theta}_{k})}\left[\ln \Theta_{k,W_{in}}\right]=\Psi\left(\beta_{W_{in}}+\sum_{i^\prime =1}^{I}\sum_{n^\prime =1}^{N_{i^\prime}}\Gamma_{i^\prime n^\prime}(k)\mathbb{I}(W_{i^\prime n^\prime}=W_{in})\right)-\Psi\left(\sum_{m=1}^M \left[\beta_m+\sum_{i^\prime =1}^{I}\sum_{n^\prime =1}^{N_{i^\prime}}\Gamma_{i^\prime n^\prime}(k)\mathbb{I}(W_{i^\prime n^\prime}=m)\right]\right)其中\Psi(x)为Digamma函数。